What is Docker?

As defined on its official website, Docker is “the world's leading software container platform,” which aims to eliminate the “it only works on my machine” problem. Docker creates containers that hold the dependencies and requirements your application needs to run. Unlike virtual-machine-based tools such as Vagrant, Docker builds on Linux container technology (originally LXC). Containers do not require a hypervisor and run directly on top of the host kernel, so they have only a minor impact on the performance of the host machine.

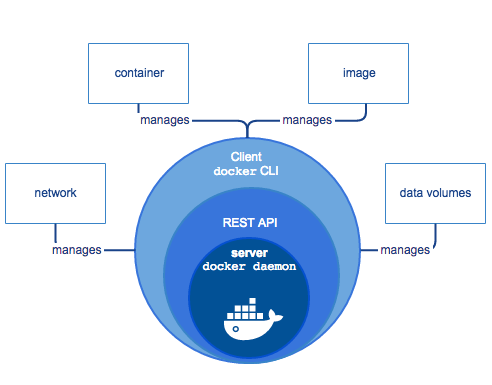

The following diagram gives a simplified view of the most important components of the Docker Engine:

Docker Engine architecture

Engine

The Docker daemon runs as root and exposes a REST API that accepts external connections. The Docker client manages the daemon through this REST API. If you run Docker on a cloud server (Amazon, Linode, etc.), for example, you can access the REST API directly without going through the cloud server console, although this requires a strict configuration of SSL/TLS certificates.
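To make the client and daemon relationship concrete, here is a minimal Python sketch that queries the daemon's REST API over its local Unix socket using only the standard library. It assumes a Linux host where the daemon listens on `/var/run/docker.sock` and the current user may read that socket. The helper names (`UnixSocketHTTPConnection`, `build_path`, `engine_get`) are made up for this illustration, and a remote connection over TCP would additionally need the certificate setup mentioned above.

```python
import http.client
import json
import socket
from urllib.parse import urlencode

# Assumption: default location of the local daemon socket on Linux.
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixSocketHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        # The host name is only used for the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def build_path(endpoint, **params):
    """Return the request path for an API endpoint plus an optional query string."""
    query = urlencode(sorted(params.items()))
    return f"{endpoint}?{query}" if query else endpoint


def engine_get(endpoint, socket_path=DOCKER_SOCKET, **params):
    """Send a GET request to the daemon and decode its JSON response."""
    conn = UnixSocketHTTPConnection(socket_path)
    try:
        conn.request("GET", build_path(endpoint, **params))
        response = conn.getresponse()
        return response.status, json.loads(response.read())
    finally:
        conn.close()
```

On a machine with a running daemon, `engine_get("/version")` should return the HTTP status together with the engine's version information, and `engine_get("/containers/json", all="true")` should list all containers, including stopped ones. This is essentially what the Docker client does for us behind the scenes.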

Docker objects:

When we use Docker, we create images and containers, connect them through networks, and assign volumes to them. These four objects are the basic parts of the platform.

- Images: Read-only templates that describe a container. An image is generally made up of a base plus specific modifications. For example, you can take a Python image and add the libraries you require, or take an image of a complete operating system such as Ubuntu and build an entire LAMP environment for web development inside it. Docker has a registry of predefined images (Docker Hub) where you can find official images as well as images modified by the community, so that you don't have to reinvent the wheel.

- Containers: A container is an instance of an image, that is, the image running. When we run a container, we can enter it through the CLI and execute whatever commands we need. For example, we can run an Ubuntu image, enter it, and install Nginx to run a web server, or run a Python image and install packages through pip.

- Networks: Docker uses a virtual interface to communicate with the host computer's network. The running containers can in turn belong to one or more networks and communicate with each other as required.

- Volumes: They give each container a private or shared space to store or access files on the host computer. They are also the way to preserve the data in a container after it finishes running.
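The four objects above can be tied together in a short Python sketch. It does not talk to Docker. It only renders the text of a Dockerfile (a base image plus modifications) and assembles the `docker run` arguments that would start a container attached to a network and a volume. The function names, the image tags (`python:3.12-slim`, `ubuntu:24.04`), the network `backend`, and the volume `site-data` are illustrative assumptions, not anything prescribed by Docker.

```python
def render_dockerfile(base_image, pip_packages):
    """Describe an image as a base plus modifications (here: extra pip packages)."""
    lines = [f"FROM {base_image}"]
    if pip_packages:
        lines.append("RUN pip install --no-cache-dir " + " ".join(pip_packages))
    return "\n".join(lines) + "\n"


def build_run_command(image, name=None, network=None, volumes=None, command=None):
    """Assemble the argument list for `docker run`, which starts a container."""
    argv = ["docker", "run", "-it"]  # -it: interactive session with a terminal
    if name:
        argv += ["--name", name]
    if network:
        # The network must already exist, e.g. created with `docker network create`.
        argv += ["--network", network]
    for source, target in (volumes or {}).items():
        # source is a named volume or a host path; target is the path in the container.
        argv += ["--volume", f"{source}:{target}"]
    argv.append(image)
    argv += command or []
    return argv


# Hypothetical example: a Python image with two extra libraries.
dockerfile_text = render_dockerfile("python:3.12-slim", ["requests", "flask"])

# Hypothetical example: an Ubuntu container on network "backend" with a named volume.
run_argv = build_run_command(
    "ubuntu:24.04",
    name="web",
    network="backend",
    volumes={"site-data": "/var/www"},
    command=["bash"],
)
```

Passing `run_argv` to `subprocess.run` on a host with Docker installed would open a shell inside the new container, where you could install Nginx as described above. Anything written under `/var/www` would live in the volume and outlast the container.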

Why use Docker?

- The “it only works on my machine” problem: As software developers, we sometimes need to install libraries, databases, packages, and many other additions for our project to work. This makes teamwork cumbersome and produces long installation and configuration guides, not to mention the unforeseen problems caused by incompatible installations, broken packages, and so on. This is where Docker comes to the rescue: it lets us build an image with all the requirements of our project in an isolated environment that will not interfere with what we already have installed.

- Performance: A virtual machine consumes a large amount of the host's resources to get ready, in addition to the time you have to wait for it to deploy. A Docker container launches in seconds and consumes only what it requires, while a virtual machine keeps its allocated share of memory reserved even when it is not using it.

- Portability and accessibility: You can keep your entire work environment in one image and upload it to Docker's image registry (Docker Hub) to share it with your collaborators or with the entire community.

- Easy deployment: If you create an image of your entire deployment, you just download it and run it on your server.

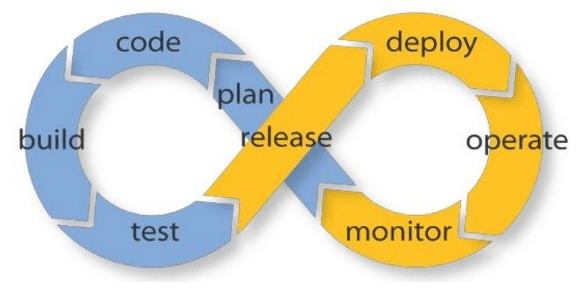

- Agile: It makes it easier to adopt DevOps techniques such as circular life cycles.

Circular life cycle

- Scalable: You can replicate a container or run a swarm of containers (Docker Swarm). Docker even load balances between containers.
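The sharing, deployment, and scaling points in this list map onto a handful of CLI steps. The sketch below only builds the argument lists for those steps so the sequence is easy to read. The function name, the repository `example/webapp`, and the service name are hypothetical, and the last step assumes the server already runs in swarm mode (for example after `docker swarm init`).

```python
def release_commands(repository, tag, service_name, replicas):
    """List the CLI steps to build, publish, and run an image as a replicated service."""
    image = f"{repository}:{tag}"
    return [
        # On the developer machine: build the image from the current directory.
        ["docker", "build", "--tag", image, "."],
        # Upload it to the registry so collaborators and servers can fetch it.
        ["docker", "push", image],
        # On the server: download the image.
        ["docker", "pull", image],
        # Run several replicas as one service (requires swarm mode).
        ["docker", "service", "create", "--name", service_name,
         "--replicas", str(replicas), image],
    ]


# Hypothetical example: three replicas of a web application image.
steps = release_commands("example/webapp", "1.0", "web", 3)
```

Each inner list could be passed to `subprocess.run` in order. Keeping the steps as data also makes it simple to print them for review before anything is executed.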

The above is just a preview of all the features that Docker offers us as developers and of the advantages it gives us. Best of all, it is free software, it has a large community, the official documentation is direct and clear, and there are countless official images (Nginx, Ubuntu, Python, Node, Redis, Mongo, MySQL) as well as images modified by users. The learning curve is not very steep, but it is not a bed of roses either: Docker has quite a few options that can improve the performance of our containers and that are worth studying.

Image sources:

Docker engine: https://blog.appdynamics.com/engineering/devops-scares-me-part-4-dev-and-ops-collaborate-across-the-lifecycle/

Circular lifecycle: https://blog.appdynamics.com/engineering/devops-scares-me-part-4-dev-and-ops-collaborate-across-the-lifecycle/